The rise of ChatGPT and Bard, both built on large language models (LLMs), has shown the potential of generative models to assist with a variety of tasks. Generative models are useful well beyond language generation, however, and one particularly promising application is molecule generation for chemical product development.

Conventional discovery of new molecules often relies on expensive and time-consuming trial and error, which means that scientists may miss many potential candidates. Generative models can instead be used to explore molecular spaces and propose molecules that have not been synthesized in the lab or even theorized in simulations. Because such molecules are novel, they may also be patentable.

Generative Models: A New Method for Molecule Discovery

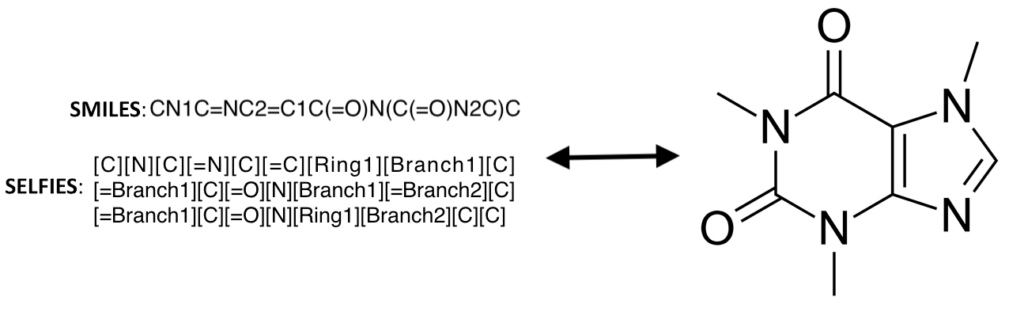

In generative models for molecules, an important design choice is the molecule representation. Common representations include molecular graphs, SMILES, and SELFIES. SELFIES has the additional advantage that every SELFIES string corresponds to a valid molecule, so a string can be mutated or generated from a latent space and still represent a valid molecule. These representations serve as the input to the generative model; popular model families include variational autoencoders (VAEs), generative adversarial networks (GANs), and normalizing flows.

SELFIES, SMILES, and molecular graph representations of the caffeine molecule
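To make the link between a SMILES string and a molecular graph concrete, here is a minimal sketch in plain Python. It is a toy parser written for illustration only: it assumes single-letter, non-aromatic atoms, `=` and `#` bond symbols, parentheses for branches, and single-digit ring closures. The function name `smiles_to_graph` and the `SUPPORTED_ATOMS` set are hypothetical, and real work would rely on a cheminformatics toolkit rather than this simplification.

```python
# Toy SMILES-to-graph conversion for a restricted SMILES subset.
# Hydrogens are implicit and are not added to the graph.
SUPPORTED_ATOMS = {"C", "N", "O", "S", "P", "F"}
BOND_ORDERS = {"=": 2, "#": 3}

CAFFEINE = "CN1C=NC2=C1C(=O)N(C(=O)N2C)C"


def smiles_to_graph(smiles):
    """Return (atoms, bonds) where bonds are (index_a, index_b, order) tuples."""
    atoms, bonds = [], []
    previous = None      # index of the atom the next atom attaches to
    branch_stack = []    # atoms to return to when a branch closes
    open_rings = {}      # ring-closure digit -> index of the opening atom
    order = 1            # bond order for the next bond

    for char in smiles:
        if char in SUPPORTED_ATOMS:
            atoms.append(char)
            index = len(atoms) - 1
            if previous is not None:
                bonds.append((previous, index, order))
            previous, order = index, 1
        elif char in BOND_ORDERS:
            order = BOND_ORDERS[char]
        elif char == "(":
            branch_stack.append(previous)
        elif char == ")":
            previous = branch_stack.pop()
        elif char.isdigit():
            if char in open_rings:
                # Second occurrence of the digit closes the ring.
                bonds.append((open_rings.pop(char), previous, order))
                order = 1
            else:
                open_rings[char] = previous
        else:
            raise ValueError(f"Unsupported SMILES character: {char!r}")
    return atoms, bonds


atoms, bonds = smiles_to_graph(CAFFEINE)
print(len(atoms), "heavy atoms,", len(bonds), "bonds")
```

The graph form makes ring structure and connectivity explicit, while the string form is compact and convenient as input to sequence models.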

These generative models are unsupervised, which means they can be trained on unlabeled data: the properties of the training molecules do not need to be known. The models can therefore be trained on large databases of molecules even when those databases lack the properties of interest. For example, the models can be trained on large existing open-source molecule databases, such as ChEMBL or QM9, which significantly reduces the cost and complexity of data collection.

Challenges and Solutions for Generative Models

However, this approach has some challenges. For example, the generated molecules might not satisfy all property requirements, might be difficult and costly to synthesize, or might not meet other business requirements such as manufacturability. Satisfying several business requirements at once is especially difficult. Fortunately, there are several ways to address this.

One option is to use a funnel or filter approach. In this approach, many molecules are generated and each molecule is checked against a requirement. Molecules that fail the requirement are removed from the candidate pool, and the remaining molecules are passed to the next filter until all filters have been applied. A filter can be a simulation, an experiment, or a machine learning (ML) model.

While this approach is rather simple, it has some advantages. For one, cheaper filters can be applied first to shrink the candidate pool, which reduces the overall cost of discovering a new molecule. A cheap filter is any test that can be run quickly and at low cost for many molecules, such as an ML model or a fast simulation. However, the approach can still be very expensive if the remaining filters are expensive, since some lab experiments and simulations are costly and time-consuming to run.
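The funnel can be sketched in a few lines of Python. This is an illustrative sketch under stated assumptions, not a production pipeline: the `Filter` class, the `run_funnel` function, the relative costs, and both filter rules (a string-length check standing in for a cheap ML model, and an oxygen check standing in for an expensive experiment) are hypothetical placeholders.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Filter:
    name: str
    cost_per_molecule: float          # hypothetical relative cost
    passes: Callable[[str], bool]     # True if the molecule meets the requirement


def run_funnel(candidates: List[str], filters: List[Filter]) -> Tuple[List[str], float]:
    """Apply filters from cheapest to most expensive and track the total cost."""
    remaining = list(candidates)
    total_cost = 0.0
    for f in sorted(filters, key=lambda f: f.cost_per_molecule):
        total_cost += f.cost_per_molecule * len(remaining)
        remaining = [m for m in remaining if f.passes(m)]
    return remaining, total_cost


# Toy candidates written as SMILES strings.
candidates = ["CCO", "CCN", "CCCCCCCCCC", "CN1C=NC2=C1C(=O)N(C(=O)N2C)C", "CC(=O)O"]

filters = [
    # Stand-in for an expensive lab experiment or simulation.
    Filter("contains_oxygen", 100.0, lambda smiles: "O" in smiles),
    # Stand-in for a cheap ML model or quick rule-based check.
    Filter("short_smiles", 1.0, lambda smiles: len(smiles) <= 12),
]

remaining, total_cost = run_funnel(candidates, filters)
print(remaining, total_cost)
```

In this toy setup the cheap filter runs on all five candidates and the expensive one on only the four survivors. Running the expensive filter first would instead spend the high cost on every candidate, which is the main reason for ordering filters by cost.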

When no robust and cheap models are available, another approach is to guide the exploration of these novel regions of chemical space with methods such as Bayesian optimization or active learning. Bayesian optimization is used to find the best molecule in the chemical space, whereas active learning is used to build accurate and robust models with as few measurements as possible. Active learning is therefore also useful for creating an ML model that can serve as a cheap filter in the filter approach.
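As a rough illustration of the active learning side, the sketch below runs a pool-based loop that always measures the candidate the model is least certain about. Everything here is an assumption made for the example: molecules are reduced to a single integer descriptor, `oracle` is a hypothetical stand-in for an expensive experiment or simulation, and the distance to the nearest measured point is used as a simple proxy for model uncertainty in place of a real probabilistic model.

```python
def uncertainty(x, labeled):
    """Proxy for model uncertainty: distance to the nearest measured point."""
    return min(abs(x - x_known) for x_known in labeled)


def run_active_learning(pool, oracle, seeds, n_queries):
    """Repeatedly measure the unlabeled candidate with the highest uncertainty."""
    labeled = {x: oracle(x) for x in seeds}
    for _ in range(n_queries):
        unlabeled = [x for x in pool if x not in labeled]
        query = max(unlabeled, key=lambda x: uncertainty(x, labeled))
        labeled[query] = oracle(query)   # the expensive step in practice
    return labeled


def oracle(x):
    """Hypothetical property measurement with its optimum at x = 14."""
    return -(x - 14) ** 2


pool = list(range(21))   # toy one-dimensional descriptor values
labeled = run_active_learning(pool, oracle, seeds=[0, 20], n_queries=3)
print(sorted(labeled))
```

The measurements spread evenly across the descriptor range, which is what one wants when the goal is an accurate model everywhere. A Bayesian optimization loop would have the same structure but would score candidates with an acquisition function that also rewards a high predicted property value.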

If the generative model represents the molecules in a latent space, as a VAE or a normalizing flow does, one could instead train a machine learning model to predict the properties of interest from the latent representation. The problem can then be treated as an inverse design problem, where promising candidates are optimized directly in the latent space and the optimized latent vector is decoded back into a molecule for further exploration. Because the latent space is continuous, gradient-based techniques can be used to search for the best molecule.
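The latent-space search can be sketched as plain gradient ascent. This is a toy example under strong assumptions: `predict_property` is a hypothetical quadratic surrogate with a hand-written gradient, whereas a real property predictor would be a trained model differentiated with an autodiff framework. The final decoding step (mapping the optimized vector back to a molecule with the generative model's decoder) is not shown.

```python
TARGET = (1.0, -2.0)   # hypothetical location of the property optimum in latent space


def predict_property(z):
    """Toy surrogate: predicted property is highest at TARGET."""
    return -sum((zi - ti) ** 2 for zi, ti in zip(z, TARGET))


def property_gradient(z):
    """Analytic gradient of the toy surrogate with respect to z."""
    return [-2.0 * (zi - ti) for zi, ti in zip(z, TARGET)]


def optimize_latent(z_start, learning_rate=0.1, steps=100):
    """Gradient ascent on the predicted property, starting from z_start."""
    z = list(z_start)
    for _ in range(steps):
        grad = property_gradient(z)
        z = [zi + learning_rate * gi for zi, gi in zip(z, grad)]
    return z


z_start = [0.0, 0.0]   # e.g. the latent encoding of a known molecule
z_best = optimize_latent(z_start)
print(predict_property(z_start), predict_property(z_best))
```

In practice the starting point would be the encoding of a known molecule, and the optimized vector would be decoded and checked, since a point that scores well under the surrogate is not guaranteed to decode to a molecule with the predicted property.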

A different approach is to train the generative model only on molecules that are already known to pass your requirements, such as synthesis cost and manufacturability. Rather than learning the distribution of all molecules, the generative model then learns the distribution of molecules that pass your requirements, so the molecules it generates are more likely to pass them as well. However, this requires labeled data, essentially converting the problem from an unsupervised task to a supervised one. To reduce the data requirements, one can start with a pre-trained generative model and then fine-tune it on the labeled subset. The major advantage of this approach is that fewer molecules have to be generated and tested.

Staying Ahead of the Curve

While there are several important factors to consider when using generative models for molecules, these technologies are now mature enough to build valuable and industrially viable materials informatics software solutions. Integrating generative methods into your workflows can help you quickly identify promising candidates that meet all of your design requirements, since a generative model for molecules can be thought of as giving you access to an effectively unlimited database of promising new molecules. Innovation leaders are investing in learning how to leverage these tools in their R&D teams to gain a competitive advantage, especially when pursuing new markets with high growth potential and room for major chemical innovation.

Watch Webinar-on-Demand: Materials Informatics for Product Development: Deliver Big with Small Data
